Smooth eye movement interaction using EOG glasses

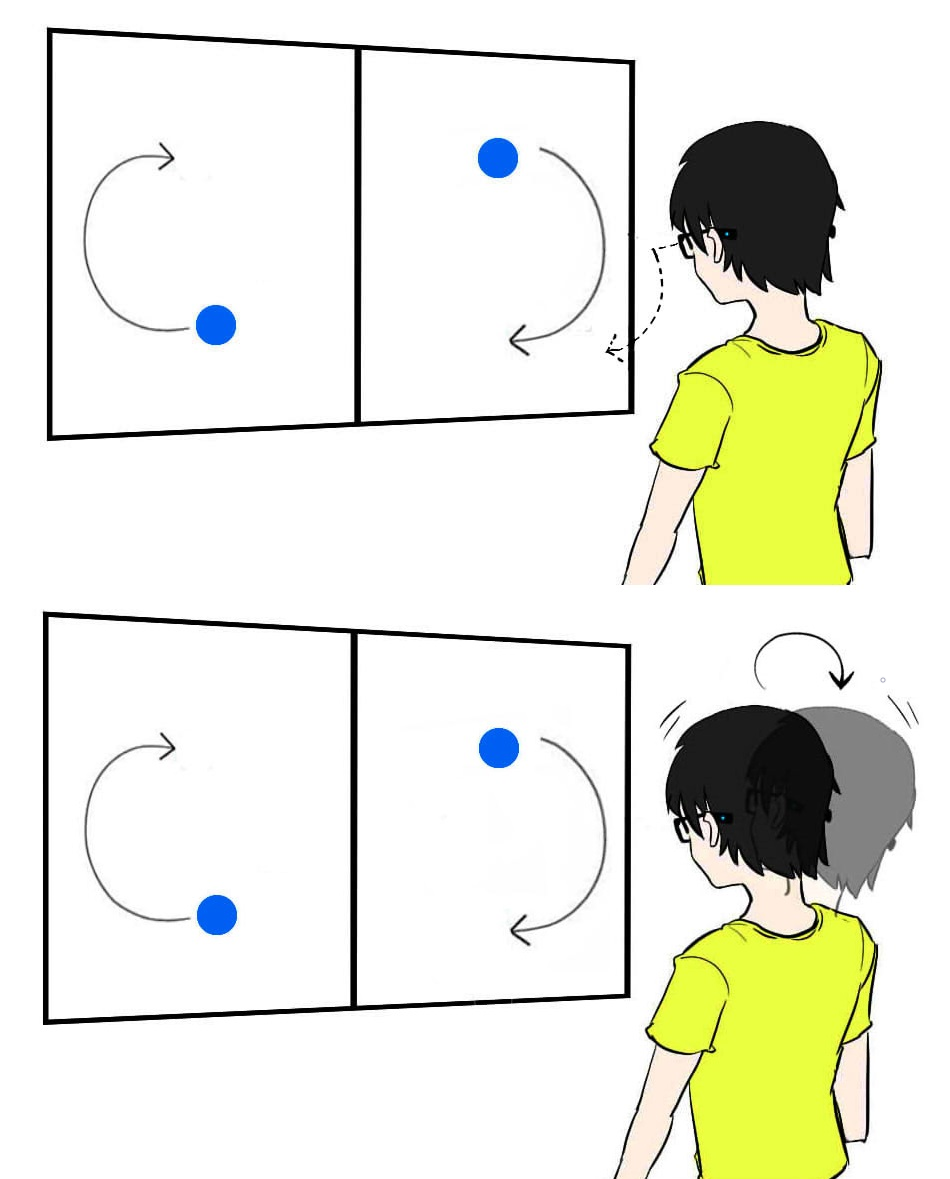

Abstract: Orbits combines a visual display and an eye motion sensor so that a user can select among options by following with the eyes a cursor that travels in a circular path around each option. Using an off-the-shelf pair of Jins MEME eyeglasses, we present a pilot study suggesting that the eye movement required for Orbits can be sensed with three electrodes: one in the nose bridge and one in each nose pad. For forced-choice binary selection, we achieve an input rate of 2.6 bits per second (bps) at 250 ms per input. We also introduce Head Orbits, in which the user fixates the eyes on a target and moves the head in synchrony with the orbiting target. This method, which measures only the movement of the eyes relative to the head, achieves a maximum rate of 2.0 bps at 500 ms per input. Finally, we combine the two techniques with a gyroscope to create an interface with a maximum input rate of 5.0 bps.
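The abstract does not spell out how an eye movement is matched to an orbiting cursor. A common approach for Orbits-style smooth pursuit interfaces is to correlate the eye signal with each target's trajectory over a short window and choose the target whose correlation clears a threshold. The sketch below assumes that approach and assumes the EOG has already been reduced to horizontal and vertical channels; the helper names (`pearson`, `orbit`, `select_target`) and parameters (100 Hz sampling, 1 s orbit period, 0.5 s window, 0.8 threshold) are hypothetical, and this is not the authors' implementation.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Trajectory = Tuple[List[float], List[float]]  # (x samples, y samples)


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation of two equal-length signals (0.0 if either is flat)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a flat signal carries no pursuit information
    return cov / math.sqrt(var_a * var_b)


def orbit(phase: float, period_s: float, rate_hz: float, window_s: float) -> Trajectory:
    """Sample a cursor moving counter-clockwise on a unit circle (hypothetical helper)."""
    n = int(window_s * rate_hz)
    xs, ys = [], []
    for i in range(n):
        angle = phase + 2 * math.pi * (i / rate_hz) / period_s
        xs.append(math.cos(angle))
        ys.append(math.sin(angle))
    return xs, ys


def select_target(eog_h: Sequence[float], eog_v: Sequence[float],
                  targets: Dict[str, Trajectory],
                  threshold: float = 0.8) -> Optional[str]:
    """Return the target that best matches the eye signal on both axes,
    or None if no target clears the threshold (hypothetical helper)."""
    best_name: Optional[str] = None
    best_score = threshold
    for name, (tx, ty) in targets.items():
        # Require agreement on both axes: keep the weaker of the two correlations.
        score = min(pearson(eog_h, tx), pearson(eog_v, ty))
        if score > best_score:
            best_name, best_score = name, score
    return best_name


if __name__ == "__main__":
    # Two options whose cursors orbit half a revolution apart.
    a = orbit(phase=0.0, period_s=1.0, rate_hz=100, window_s=0.5)
    b = orbit(phase=math.pi, period_s=1.0, rate_hz=100, window_s=0.5)
    targets = {"A": a, "B": b}

    # Simulated EOG while following A: arbitrary gain and offset plus a small ripple.
    h = [250 * x + 30 + 5 * math.sin(7.3 * i) for i, x in enumerate(a[0])]
    v = [180 * y - 12 + 5 * math.sin(5.1 * i) for i, y in enumerate(a[1])]
    print(select_target(h, v, targets))  # prints A
```

Taking the minimum of the two per-axis correlations means a cursor is selected only when the eyes follow it both horizontally and vertically. Pearson correlation is also unaffected by an EOG channel's uncalibrated gain and constant offset, although a channel with inverted polarity flips the sign of the correlation and would have to be negated first.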

Appeared: Junichi Shimizu, , Murtaza Dhuliawala, Andreas Bulling, Thad Starner, Woontack Woo, Kai Kunze, "Solar system: smooth pursuit interactions using EOG glasses" Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct, doi:10.1145/2968219.2971376

Murtaza Dhuliawala, , Junichi Shimizu, Andreas Bulling, Kai Kunze, Thad Starner, Woontack Woo, "Smooth eye movement interaction using EOG glasses" Proceedings of the 18th ACM International Conference on Multimodal Interaction, doi:10.1145/2993148.2993181